Running a database on EC2? Your clock could be slowing you down.

We recently found that our database machines were spending a lot of CPU time getting the time. This post is a look at what was causing it, how you can check if your machines are affected, and how to fix it.

The tl;dr is that a single command to change the Linux clocksource on our database machines significantly cut execution times for distributed worker queries. The default clocksource on many EC2 instance types is xen, which is much slower than it needs to be. Together with our other performance work, this helped us get a 30% reduction in 95th percentile query times over the last quarter.

Thanks to Joe Damato at packagecloud for writing a post about this last year. Remembering that post gave us a head start on finding out what was up.


Clocksources slowing down our queries

Heap’s data is stored in a Postgres cluster running on i3 instances in EC2. These are machines with large amounts of NVMe storage—they’re rated at up to 3 million IOPS, perfect for high transaction volume database use cases. Making our customers’ ad hoc analytics queries fast is a huge part of what we do. We’ve written a lot about techniques we’ve used, as well as the experimental methodology we apply to query performance.

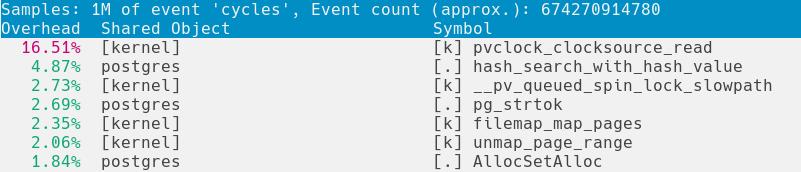

Recently, our storage team was looking at CPU profiles on our production instances, and noticed a large fraction of time being spent in pvclock_clocksource_read. The output from perf top looked like this:

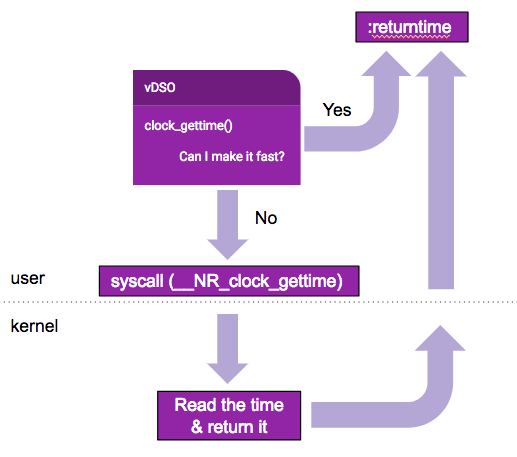

Seeing a function related to getting the time show up so high in the profile tipped us off that something might be wrong. Our servers are running complex analytical computations – why would so much of their effort go towards finding out what time it is? And in particular, we shouldn’t expect to see time-related functions showing up as kernel code. On common architectures, the vDSO has an optimised fast path for timing system calls that completely avoids the kernel.

vDSwhat?

To see what’s happening, it’s really useful to have a high level understanding of what system calls are, and how they work in Linux. Whenever a program wants the kernel to help it do something – open a file, write to the terminal, connect to your favourite source of cat gifs – it makes a system call, or syscall.

Each syscall has a number. On my system, opening a file is number 2, and connecting to a remote server is number 42. To make a syscall, a program puts that number in a special register and then invokes the kernel to handle the syscall. This switches the CPU into kernel mode; the kernel changes permissions on kernel memory, does whatever it needs to do to fulfill the request, changes the kernel memory permissions back, and switches back to user mode.

Doing all that has considerable overhead, and is ideally avoided where possible. To help out, the kernel injects a bit of code called the virtual dynamic shared object – or vDSO – into every running process. This serves a couple of purposes:

- It provides a function called __kernel_vsyscall, which lets programs use the kernel’s preferred syscall mechanism.
- It has fast paths for some system calls, in particular clock_gettime(2) and gettimeofday(2), completely avoiding switching to kernel mode.

For the fast paths, instead of making a “real” syscall, the timing syscalls run in userspace with no kernel mode switch. This only works for certain settings of the clocksource in Linux, though. In particular, in the 4.4 kernel we run, it doesn’t work for the default xen clocksource on our i3 instances, but it does for the tsc clocksource.

If you want to learn more about how system calls and the vDSO work, there’s a really great pair of articles on LWN, as well as another great post from the folks at packagecloud. And you can, of course, go straight to the source to see the timing call fast paths.

Linux Clocksources

To get the time, Linux has to interact with the hardware somehow. Exactly how this works depends on the hardware and its capabilities: it could be an instruction, or it could be reading from a special memory location or register. Linux has a collection of drivers to provide a common interface: the clocksource.

Your system might have multiple clocksources available; you can find the list in sysfs. For example, on my laptop I get

$ cat /sys/devices/system/clocksource/clocksource0/available_clocksource

while on an i3 instance in EC2, I get those three, plus our culprit: xen.
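If you want to check this across a fleet rather than by eye, a couple of shell functions can read the same sysfs file. This is a minimal sketch, not our production tooling: the function names list_clocksources and has_clocksource, and the optional directory argument (handy for testing against a fake copy of the sysfs files), are hypothetical.

```shell
# Hypothetical helpers around the real sysfs file shown above.

# Print the clocksources the kernel offers, one per line.
# $1: optional directory to read from (defaults to the real sysfs path).
list_clocksources() {
  local dir="${1:-/sys/devices/system/clocksource/clocksource0}"
  # available_clocksource is one space-separated line; split it into lines
  # and drop any empty line left behind by a trailing space.
  tr -s ' ' '\n' < "$dir/available_clocksource" | sed '/^$/d'
}

# Exit with status 0 if clocksource $1 is available, 1 otherwise.
# $2: optional directory, as above.
has_clocksource() {
  list_clocksources "$2" | grep -qxF -- "$1"
}

# Example on a live machine:
#   has_clocksource tsc && echo "tsc is available"
```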

Xen is the hypervisor that AWS uses on a lot of its instance types. It manages one or more EC2 VMs running on a single physical server. The xen clocksource in Linux gets the time from the Xen hypervisor running on the host.

The tsc clocksource we switched to reads the time from the timestamp counter, or TSC. This is a counter on x86 CPUs that roughly corresponds to the number of clock cycles since processor start. You can read it by issuing the rdtsc or rdtscp instructions. Crucially, these are unprivileged instructions, which is why you can get the time from the tsc clocksource without an expensive switch to kernel mode. That means using tsc should be a lot faster. If we’re spending 16% of our CPU time making these timing calls, that could be a big deal.

Why is setting the clocksource to tsc safe?

In the packagecloud post, they write in bold:

> It is not safe to switch the clocksource to tsc on EC2. It is unlikely, but possible that this can lead to unexpected backwards clock drift. Do not do this on your production systems.

So why did we make the change? And if tsc is better to use than xen, why isn’t it the default? The story here gets a bit complicated: the different clocksources have different levels of stability, frequency, and cross-processor synchronization. Since tsc directly issues an rdtsc instruction, its properties are tied to the hardware, both physical and virtual. We did some extra investigation into this, and determined that we could make this change safely in production.

Since the physical and virtual details matter, let’s find out what they are. On the physical side, AWS says that the i3 instance type uses Intel Xeon E5-2686 v4 CPUs. On the virtual side, our system boot logs tell us that they are running on a Xen 4.2 host:

$ sudo dmesg | grep -i 'xen version'
[ 0.000000] Xen version 4.2.

The Xen community have an excellent document about how they handle timestamp counter emulation in Xen 4.0 and up, where they explain the two ways that backwards clock drift might happen:

- on older hardware, the timestamp counter was not synchronized across processors or sockets, so a process could see backwards drift if the kernel scheduled it onto another CPU
- in virtualized systems, backwards drift can happen if the VM is saved and restored, or if it is live migrated to another physical host

The Xeon processors in i3 instances are from well after the rough cutoff for “older hardware”, and are TSC-safe in Xen’s terminology, so the first reason is out. We also know that our systems aren’t saved and restored – we’d get paged if they were! And EC2 does not live migrate VMs across physical hosts. I couldn’t find anything explicit from AWS on this, but it’s something that Google is happy to point out. This means that we’re not affected by the virtualization causes either.

Taken all together, this means that those backwards clock drifts won’t happen to the timestamp counter as observed by our database instances! And AWS itself actually suggests setting the clocksource to tsc in their EC2 Instances Deep Dive slide deck.

Are timing calls slow on your machines?

There’s a pretty easy way to see if timing syscalls are on the slow path or the fast path on your machines: just strace getting the time, and see if there is a syscall or not. Below I’m using date and sending its output to /dev/null.

If the syscalls are accelerated by the vDSO, you won’t see a syscall.

$ strace -e gettimeofday,clock_gettime -- date >/dev/null

If the calls are not accelerated, you’ll see output more like this:

$ strace -e gettimeofday,clock_gettime -- date >/dev/null

+++ exited with 0 +++

The call to clock_gettime is visible to strace. This means that the process made a real syscall, and switched into kernel mode to get the time.
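To turn this into a yes/no answer in a script, you can search strace’s output for those syscall names. This is a minimal sketch in bash, assuming strace’s default output format, where each traced call starts its line with the syscall name (options such as -f or -tt add prefixes and would need a looser pattern); the function name saw_timing_syscall is hypothetical.

```shell
# Hypothetical helper: read strace output on stdin and succeed (status 0)
# if any gettimeofday or clock_gettime call went through the kernel,
# i.e. the timing calls are on the slow path.
saw_timing_syscall() {
  grep -qE '^(clock_gettime|gettimeofday)\('
}

# Example on a live machine. strace writes its trace to stderr, so send
# that into the pipe and discard date's own output:
#   strace -e gettimeofday,clock_gettime -- date 2>&1 >/dev/null |
#     saw_timing_syscall && echo "slow path: timing calls enter the kernel"
```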

You can also check the clock source directly. On a Xen-based EC2 instance the clock source will be xen by default.

$ cat /sys/devices/system/clocksource/clocksource0/current_clocksource
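To flag affected hosts automatically, a script can read current_clocksource and map it to what we saw in this post. This is a hedged sketch: the mapping only encodes our observations (xen took the slow path on our 4.4 kernel, while tsc, and kvm-clock on Nitro, take the vDSO fast path). Other kernels may behave differently, so the strace check remains the ground truth. The function name check_clocksource is hypothetical.

```shell
# Hypothetical helper: print the current clocksource with a verdict based
# only on the clocksources discussed in this post.
# $1: optional directory (defaults to the real sysfs path).
check_clocksource() {
  local dir="${1:-/sys/devices/system/clocksource/clocksource0}"
  local current
  current=$(cat "$dir/current_clocksource") || return 2
  case "$current" in
    tsc|kvm-clock)
      echo "$current: fast path (vDSO, no switch to kernel mode)" ;;
    xen)
      echo "$current: slow path on the 4.4 kernel we run (real syscall)" ;;
    *)
      echo "$current: not covered here; check with strace" ;;
  esac
}

# Example on a live machine:
#   check_clocksource
```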

Changing the clocksource

Changing the clock source is actually really straightforward: you write to the file in /sys/devices instead of reading from it:

$ echo tsc | sudo tee /sys/devices/system/clocksource/clocksource0/current_clocksource

Now if you check the syscalls made by date, you won’t see any:

$ strace -e gettimeofday,clock_gettime -- date >/dev/null

There’s no need to reboot for it to take effect, but you may want to ensure this is set on every boot, for example via your init system, or by adding clocksource=tsc to the kernel command line.
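A slightly more defensive version of the echo | tee command only switches if tsc is actually offered, and reads the value back afterwards, since the kernel reports the clocksource it is really using. This is a minimal sketch, assuming bash run from a root shell (sudo cannot invoke a shell function directly); the function name set_clocksource and its optional directory argument are hypothetical.

```shell
# Hypothetical helper: switch to clocksource $1 if the kernel offers it,
# then confirm by reading current_clocksource back.
# $2: optional directory (defaults to the real sysfs path).
# Writing to the real sysfs file requires root.
set_clocksource() {
  local want="$1"
  local dir="${2:-/sys/devices/system/clocksource/clocksource0}"
  local now

  # Refuse to write a name the kernel does not list as available.
  if ! tr -s ' ' '\n' < "$dir/available_clocksource" | grep -qxF -- "$want"; then
    echo "clocksource '$want' is not available" >&2
    return 1
  fi

  echo "$want" > "$dir/current_clocksource" || return 1

  # Trust only what the kernel reports back.
  now=$(cat "$dir/current_clocksource")
  if [ "$now" != "$want" ]; then
    echo "requested '$want' but current clocksource is '$now'" >&2
    return 1
  fi
  echo "clocksource is now $now"
}

# This does not survive a reboot. One way to persist it is the kernel
# command line parameter clocksource=tsc, for example via
# GRUB_CMDLINE_LINUX in /etc/default/grub on GRUB-based systems (then
# regenerate the GRUB config; the command varies by distro).
```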

Does it actually matter?

Naturally, the answer is that it depends on your workload. With our analysis workload on Postgres, the answer was a resounding yes. And given how little time it takes, you could just check—you’ve spent about as much time reading to this point in the post!

On our database machines, the effects were exacerbated because we collect detailed timing information on query execution so that we can keep improving performance. This meant that Postgres was making a lot of timing calls on the query path, and we could observe that in CPU profiles. Even without the extra timing information, Postgres still makes a lot of timing calls.

One easy way to see if this is affecting your performance is to run perf top and look for instances of pvclock_clocksource_read near the top. If you see it at more than a couple of percent, that’s time that could be better spent elsewhere. This is what happened to us.
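perf top is interactive, so for a scripted check across machines you could record a short system-wide profile and read the report as text. This is a minimal sketch, assuming perf report --stdio --sort symbol output where each data line starts with the overhead percentage and ends with the symbol name (recorded without -g, so there is a single overhead column; the format may vary between perf versions). The function names pvclock_share and pvclock_over, and the 2% default threshold (our reading of “more than a couple of percent”), are hypothetical.

```shell
# Hypothetical helper: read `perf report --stdio --sort symbol` output on
# stdin and print the overhead percentage of pvclock_clocksource_read,
# or 0 if the symbol does not appear.
pvclock_share() {
  awk '$NF == "pvclock_clocksource_read" { sub(/%$/, "", $1); print $1; found = 1; exit }
       END { if (!found) print 0 }'
}

# Succeed (status 0) if that share exceeds $1 percent (default 2).
pvclock_over() {
  pvclock_share | awk -v limit="${1:-2}" '{ exit !($1 > limit) }'
}

# Example on a live machine (10-second system-wide profile):
#   sudo perf record -a -o /tmp/perf.data -- sleep 10
#   sudo perf report -i /tmp/perf.data --stdio --sort symbol |
#     pvclock_over 2 && echo "pvclock_clocksource_read is above 2% of samples"
```

A missing symbol reads as 0 rather than an error, which fits the caveat that follows: not seeing it in the profile does not prove you are unaffected.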

But be aware: if you don’t see it in the profile, it doesn’t mean you’re not affected. If your system makes timing calls on its critical path, there could be some unnecessary latency introduced by the slow calls. The only real way to know is to try switching the clocksource and compare your performance indicators before and after the change. In our case, we used our shadow prod experimental setup to run production queries through a machine with the tsc clocksource, and as mentioned in the intro we saw a nice performance boost.

When abstractions leak

If you’re reading this, the idea of virtual machines is probably quite familiar. Instead of running software directly on a physical machine, with its physical CPUs and peripherals, there’s a layer of software that pretends to be the CPU and its peripherals.

On modern hardware, most of your code will run directly on the CPU, but some operations, for example getting the value of the timestamp counter, will cause the hypervisor to step in. For a huge number of use cases, this difference doesn’t matter much at all. But if a workload hits one of these emulated facilities or peripherals on a hot path, suddenly you might need to know about virtualization, clocksources, the vDSO, and the history of the timestamp counter.

Joel Spolsky has a classic blog post where he calls things like this leaky abstractions: situations where you need to be aware of details the abstraction is meant to let you ignore.

Interestingly, there’s a different abstraction leak at play here as well: clock_gettime(2) and gettimeofday(2) are system calls, but they might not go through the kernel’s syscall mechanism. When strace didn’t print out clock_gettime, that was this detail leaking through. In our use case this leak doesn’t matter, but in another it might. For example, Spectre and Meltdown have shown us that access to precise clocks is an attack vector. The vDSO fast path means that the ordinary syscall filtering mechanisms, such as seccomp, won’t let you monitor or block access to those clocks.

Things are getting better

EC2 is switching to a custom hypervisor called Nitro, which is based on KVM. VMs using Nitro have kvm-clock as their default clocksource. This clocksource has an implementation that works with the vDSO fast path. The m5 and c5 instance types are already using the new hypervisor, and you can see the effect on getting the time:

$ cat /sys/devices/system/clocksource/clocksource0/current_clocksource

$ strace -e gettimeofday,clock_gettime -- date >/dev/null

Nitro has lots of other benefits too, allowing much closer to bare metal IO performance for disks and network. Brendan Gregg has a great writeup on Nitro, where he says its overhead is ‘miniscule, often less than 1%’.

Presumably there will be a next-generation i-series instance type at some point, which would bring all these benefits to the high performance storage instances in our database cluster.

But until then…

… go check your clocksource!

It only takes a minute to see what your clocksource is, and not much longer to see if your system is spending lots of its time in pvclock_clocksource_read. This could be a really easy performance win on your database machines. Go check it out! Have questions or other pro tips? Let me know @kamalmarhubi. And if you’re interested in this kind of work, we are hiring!