Heap’s Next Generation Data Platform: How we re-architected Heap to make it 5x faster

Since we started Heap in 2013, we’ve seen the use of behavioral data expand exponentially, especially at the enterprise level. This is great - at large companies, there’s no better solution for quickly understanding what your users are doing and why.

But managing all of that data comes with its own challenges: as the volume of data grows, queries get slower and slower. For our enterprise users, this can be a real problem. That’s why for the past year we’ve been hard at work rebuilding the core Heap Data Platform from the ground up.

Introducing Heap’s Next Generation Data Platform. It’s an analytics platform fully re-architected to handle enormous datasets, and return answers immediately. We’ve been quietly rolling it out to an initial set of customers over the past few months, and are excited to announce the rollout to all customers beginning today!

For you this means: faster queries, greater flexibility, and access to even more advanced analytics capabilities like Heap Illuminate.

That said, re-architecting from the ground up wasn't without its difficulties. Here are some of the challenges we faced, and what we did to overcome them.

Heap’s unique platform

From its inception, Heap’s product and platform have been built on the promise of a complete, retroactive, autocaptured dataset. These core capabilities are what make Heap so powerful, allowing teams to be more agile in how they capture and analyze information. But they also introduce significant challenges in designing a platform that performs at scale.

Autocaptured data: Heap automatically captures a complete behavioral dataset (views, clicks, taps, form submissions, etc.). This creates a powerful dataset for analysis, but also generates a significant volume of data: a typical Heap session tracks dozens of autocaptured events, far more than a legacy manual tracking platform would typically instrument. This means the Heap platform must be able to process and store billions of events every day in a format that allows for easy querying.

Heap Illuminate-powered insights directly from autocaptured data: Heap’s Illuminate capabilities proactively surface suggestions directly from your data, allowing you to find insights in data you might never have thought to manually track. This means we need to be able to query the autocaptured data in real time based on whatever you’re currently looking at, and not rely on pre-computed insights that don’t reflect the problem you’re trying to solve.

Retroactive, virtual events: Heap's platform provides the flexibility to define events on top of the autocaptured dataset and use them in analysis – for example, defining a conversion event based on button text and a page URL pattern. These event definitions are retroactive, which means that Heap applies them back in time on your dataset, allowing you to analyze from the beginning of data collection, not just from when you defined the event.

Retroactive user and account identification across devices and platforms: Heap also allows you to pass in a user identity in order to analyze their behavior across devices and platforms. This identity is also applied retroactively, allowing you to understand what a user did in the sessions before they signed up or converted. (Note: user identification is done via a customer-provided identity, like an account ID after sign up – Heap does not do any device or user fingerprinting.)
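To make the two "retroactive" ideas above concrete, here is a minimal sketch in Python. It is not Heap's implementation: the `RawEvent` fields, the `signup_click` definition, and the `identities` mapping are all hypothetical names invented for illustration. The point it shows is that a virtual event can be treated as a predicate evaluated over stored history at query time, and that an identity supplied later can be applied to events captured earlier.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class RawEvent:
    """One autocaptured event. All field names are hypothetical."""
    anonymous_id: str  # id assigned at capture time, before the user is known
    timestamp: int     # seconds since some epoch
    kind: str          # e.g. "click" or "pageview"
    text: str          # e.g. button text
    url: str


# A virtual event is modeled here as a named predicate over raw events.
VirtualEvent = Callable[[RawEvent], bool]


def signup_click(e: RawEvent) -> bool:
    # Hypothetical definition: button text plus a page URL pattern.
    return e.kind == "click" and e.text == "Sign up" and e.url.startswith("/pricing")


def match(events: List[RawEvent], definition: VirtualEvent) -> List[RawEvent]:
    # Evaluated at query time over all stored history, so a definition
    # created today still matches events captured long before it existed.
    return [e for e in events if definition(e)]


def resolve_user(anonymous_id: str, identities: Dict[str, str]) -> str:
    # Fall back to the anonymous id when no identity has been provided.
    return identities.get(anonymous_id, anonymous_id)


history = [
    RawEvent("dev-1", 100, "pageview", "", "/pricing"),
    RawEvent("dev-1", 110, "click", "Sign up", "/pricing/team"),
    RawEvent("dev-2", 120, "click", "Sign up", "/pricing"),
    RawEvent("dev-2", 130, "click", "Learn more", "/blog"),
]

# The customer-provided identity arrives after these events were captured.
identities = {"dev-1": "account-42", "dev-2": "account-42"}

matches = match(history, signup_click)
users = {resolve_user(e.anonymous_id, identities) for e in matches}
```

In this toy dataset, both matching clicks come from different devices but resolve to the same customer-provided identity once the mapping is applied.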

All of this adds up to an incredibly complex platform to design, implement, and operate at scale.

Heap’s new platform

Heap has run for years on Postgres using the Citus extension to turn it into a massively distributed database. Postgres is a tried-and-true database that powers some of the largest products in the world, and we still use it wherever we can. But as our core analytical query system, we were starting to hit its limits at scale.

Heap’s original architecture stored every event in one place: a single giant distributed Citus table. We made heavy use of partial indexes to make it efficient to query virtual events, and partitioned data by user id to ensure that as much of the query workload as possible was pushed down to the individual nodes. This system had a few limitations. Postgres is row-based, which meant that data didn’t compress well and many types of queries were slower than they would have been had the data been stored in a columnar format. It also fundamentally coupled compute and storage, which meant we couldn’t scale them independently or take advantage of cheaper cloud storage.
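As a rough illustration of why partitioning by user id lets work be pushed down to individual nodes, consider the sketch below. It is an assumption-laden toy, not Citus's actual distribution logic: `NUM_SHARDS`, `shard_for`, and `funnel_count_on_shard` are hypothetical names. Because every event for a given user lands on the same shard, a per-user question (here, a two-step funnel) can be answered on each shard independently and the partial counts simply summed.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_SHARDS = 4  # hypothetical shard count

Event = Tuple[str, str]  # (user_id, event_name), listed in time order


def shard_for(user_id: str) -> int:
    # Stable hash so the same user always maps to the same shard.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def distribute(events: List[Event]) -> Dict[int, List[Event]]:
    shards: Dict[int, List[Event]] = defaultdict(list)
    for user_id, name in events:
        shards[shard_for(user_id)].append((user_id, name))
    return dict(shards)


def funnel_count_on_shard(rows: List[Event], first: str, second: str) -> int:
    """Count users on this shard who did `first` and later did `second`."""
    seen_first = set()
    converted = set()
    for user_id, name in rows:  # rows keep their original time order
        if name == first:
            seen_first.add(user_id)
        elif name == second and user_id in seen_first:
            converted.add(user_id)
    return len(converted)


events = [
    ("u1", "view"),
    ("u2", "view"),
    ("u1", "signup"),
    ("u3", "signup"),  # u3 never viewed first, so does not convert
    ("u2", "signup"),
]

shards = distribute(events)
# Each shard computes its own partial answer; no cross-shard data movement.
total = sum(funnel_count_on_shard(rows, "view", "signup") for rows in shards.values())
```

The coordinator only has to add up one small number per shard, which is the property that makes per-user partitioning attractive for funnel-style queries.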

The new system splits the architecture across two components: a Hudi data lake for offline processing and SingleStore as our core “live” analytics system. We chose SingleStore after an extensive evaluation of a number of different platforms, including the option of building something ourselves from scratch. It offered several key advantages:

Faster querying. While Postgres continued to hold up well on simpler queries, SingleStore stood out for its ability to join data across distributed tables. This significantly simplified our architecture (allowing us to store user and account data separately from the event data) and enabled us to massively speed up some of our slowest query types.

More efficient scaling. SingleStore separates where data is stored from where queries are run (the “separation of storage and compute”). It also natively supports columnar storage, which allows for much more efficient storage for an analytics workload. These two things combined mean that we can store data much more efficiently, taking advantage of cheap cloud storage, and scale out our query capacity separately from the underlying storage.

Flexibility. Heap runs some very complex queries, and we make heavy use of UDFs (user-defined functions) to push that complexity down into the database engine. SingleStore has both a powerful set of query primitives in SQL and support for us to create our own UDFs for more advanced cases.
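To give a feel for why a columnar layout tends to store analytics data more efficiently than a row layout, here is a small, purely illustrative Python sketch. It uses run-length encoding as a stand-in compression scheme; this is an assumption made for the example and says nothing about how SingleStore or Postgres actually encode data. The `rows` data and column names are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple

# Hypothetical events: (event kind, platform, timestamp)
rows = [
    ("click", "web", 1),
    ("click", "web", 2),
    ("click", "web", 3),
    ("pageview", "web", 4),
    ("pageview", "web", 5),
    ("pageview", "ios", 6),
]


def run_length_encode(values: List[object]) -> List[Tuple[object, int]]:
    # Collapse consecutive equal values into (value, run length) pairs.
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


# Row layout: values from different columns are interleaved on disk,
# so neighboring values are rarely equal and runs stay short.
row_layout = [value for row in rows for value in row]

# Column layout: each column is stored contiguously, so repeated
# values sit next to each other and collapse into long runs.
kind_column = [row[0] for row in rows]
platform_column = [row[1] for row in rows]

row_runs = run_length_encode(row_layout)
kind_runs = run_length_encode(kind_column)
platform_runs = run_length_encode(platform_column)
```

In this toy data the interleaved row layout does not compress at all under run-length encoding, while each low-cardinality column collapses to two runs. A query that only needs the event kind also reads six values instead of eighteen.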

In a follow-up post we’ll dive more into the technical details, but the end result is that we now have a platform that is faster, cheaper, and more flexible than our prior system.

Rolling out the new platform

For the past few months, we’ve been running the new platform alongside our existing platform, running the same queries on both systems and comparing the results. This has allowed us to verify that the new system is operating correctly, and to do direct comparisons of the performance at scale.
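The side-by-side approach described above can be sketched roughly as follows. This is a simplified illustration, not our actual tooling: `shadow_compare`, `Comparison`, and the two stand-in backends are hypothetical names, and a real comparison would also need to handle things like floating-point tolerance and timeouts.

```python
import time
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

# A backend is modeled as a function from a query string to result rows.
QueryFn = Callable[[str], List[Any]]


@dataclass
class Comparison:
    query: str
    results_match: bool
    old_seconds: float
    new_seconds: float


def timed(run: QueryFn, query: str) -> Tuple[List[Any], float]:
    start = time.perf_counter()
    rows = run(query)
    return rows, time.perf_counter() - start


def shadow_compare(query: str, run_old: QueryFn, run_new: QueryFn) -> Comparison:
    old_rows, old_seconds = timed(run_old, query)
    new_rows, new_seconds = timed(run_new, query)
    # Compare order-insensitively, since two engines may return
    # the same rows in a different order.
    results_match = sorted(old_rows, key=repr) == sorted(new_rows, key=repr)
    return Comparison(query, results_match, old_seconds, new_seconds)


# Stand-in backends returning canned rows (hypothetical data).
def old_backend(query: str) -> List[Any]:
    return [("u1", 3), ("u2", 1)]


def new_backend(query: str) -> List[Any]:
    return [("u2", 1), ("u1", 3)]


report = shadow_compare("signups by user", old_backend, new_backend)
mismatch = shadow_compare("signups by user", old_backend, lambda q: [])
```

Recording both the match flag and the two timings per query is what makes it possible to check correctness and compare performance from the same run.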

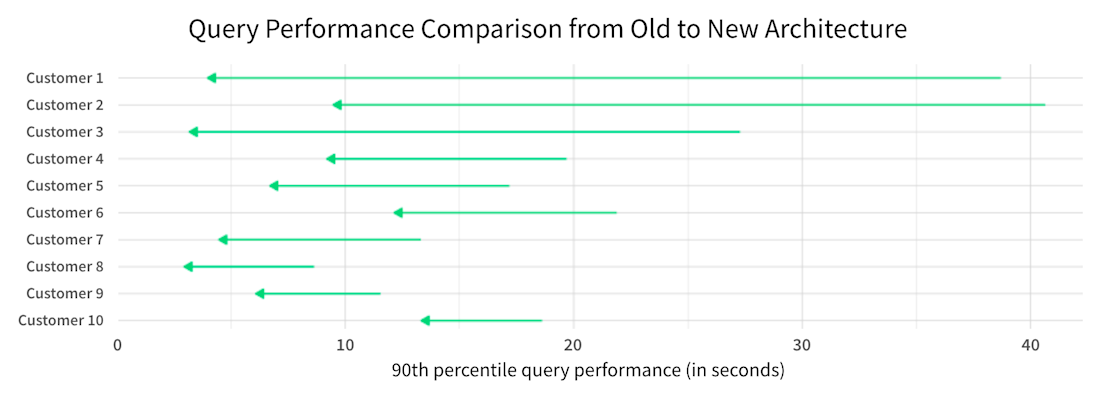

The biggest impact of the new system is felt by our largest customers, who are typically seeing 5-10x improvements in query performance on their slowest queries:

Comparing query performance of some of the first large customers running on the new system.

We’ve also achieved our goals around data latency, meaning that the data is more up-to-date when you query it. This is important for customers who are trying to make real-time decisions based on user behavior. And we’re seeing decreased costs as we roll customers over to the new system, particularly in data storage.

We’re just starting to scratch the surface of what’s possible with this new architecture – we’re still finding new performance tweaks just about every day, and are rolling out several new query abilities that take advantage of the new architecture to customers who are already on it. We’re excited to push the limits of the system over the next few months as we complete the rollout to all customers.

Try it now

We’re still putting the new platform through its paces, and are excited to have as many customers as possible trying it out. If you’re an existing customer, there’s no need to do anything – we’re rolling through the customer base and migrating existing customers as fast as we can. The change is completely transparent, so all you should notice is queries getting faster.

If you’re not yet a Heap customer, give it a try: you can sign up for a free trial of Heap and you’ll automatically be started on our new platform.
