How We Found a Missing Scala Class

Here’s a fun tale of debugging that starts off with a common alert nagging our on-call rotation, leads us to a very confusing NoClassDefFoundError, and ends up with us knowing a lot more about dynamic tracing in the JVM.

An on-call annoyance

We use Flink to compute near-real-time aggregations based on incoming events. Flink is a JVM framework for handling streaming data. Over the last several weeks, we’ve had to restart one of our Flink jobs very often. We looked into the issue a number of times as part of our on-call duties, but we didn’t manage to solve the issue. Eventually, we decided to dig deeper and find the root cause.

NoClassDefFoundError? But it’s right there!

When we investigated the error more deeply, we were surprised to find a NoClassDefFoundError for a class that was clearly in the JAR that Flink was running. We could see it, and even weirder, that class would load successfully earlier in the same process.

The error we were seeing resulted in a stack trace that looked something like this:

Our Flink code is written in Scala, and this particular class is part of the rediscala library we use for interacting with Redis. We run our Flink jobs by sending a “fat JAR”, which is a JAR of our code plus all of its dependencies. The rediscala library is assembled into this JAR, along with the class in question. We confirmed this by running javap on the file:

The class is in the same JAR as the rest of our code, so it’s definitely in the classpath. Why can’t Flink find it? To understand what was going on, we need to go on a detour into how the JVM loads classes.

JVM Class loading 101

Class loading in Java (and hence, Scala) is lazy – instead of loading all existing classes during application startup, the JVM only loads the subset that is necessary to start the program. As code is executed, more classes are loaded.

Let’s look at an example:

The JVM has a -verbose option that prints a line for every class that was loaded. This is the output we get if we run our example code with this option.

The verbose output shows that the TreeMap class was only loaded when we tried to create an instance of it. It wasn’t loaded when the program started, as we can confirm by the fact that the first println happens before the class loading.

You may be wondering about the imports in the beginning of the code and how they factor into this. These don’t do any class loading. They just tell the compiler “hey, the fully-qualified name of the TreeMap class is actually java.util.TreeMap. If someone tries to use a TreeMap without specifying a package, use the one defined in java.util.”

The snippet above is a good example of what is known as “implicit class loading” (as opposed to “explicit” class loading, e.g., calling Class.forName). Implicit class loading is what happens when a class is loaded because of reference, instantiation, or inheritance. Above, the TreeMap class is loaded because we’re creating a variable of that type. The JVM also ends up loading the SortedMap and NavigableMap interfaces because the TreeMap class implements both of them.

When a class cannot be found at runtime while attempting to load it implicitly, we get a NoClassDefFoundError. This means that the class existed when we compiled our application, but it can no longer be found at runtime. This could be because we messed up our classpath, or because our JAR is incomplete. Or it could be for a completely different reason – as is the case here!

Back to the stack trace

As a refresher, this is the beginning of the stack trace we’re trying to decipher:

We see the now familiar NoClassDefFoundError – let’s look at the code that causes it:

The code on lines 69-71 defines a lambda function. Scala lambdas are implemented as classes under the hood [1]. Hence, in order to execute this code, the JVM needs to load the class in question – and that class is the RedisClientActor$$anonfun$onConnectionClosed$1 from our stack trace. We already confirmed it’s present in the JAR, so we need to look elsewhere for the root cause.

If we read a bit further down the stack trace, we see the following:

The Javadoc for the URLClassLoader.findClass method includes an interesting tidbit:

Notice the “… if the loader is closed” part. This part isn’t included in the docs for ClassLoader.loadClass or the other callers of findClass, making it a bit tricky to uncover. It means that a NoClassDefFoundError does not necessarily imply a class is missing! It could also be caused by a closed URLClassLoader You might be wondering why a class loader would need to be closed. The ClassLoader class doesn’t even have a close method. However, URLClassLoaders are a bit different and got the close() method added in Java 7. Flink wants to close class loaders in order to ensure any file descriptors are closed and the cached libraries are deleted immediately.

Instrumenting class loading with Btrace

To find out if we were calling findClass on a closed classloader, we needed a bit more detail on class loading than -verbose gives us. We reached for btrace – a dynamic tracing tool for the JVM. BTrace allows you to attach to a running Java process and instrument relevant classes, with no restarting or changes to the application code needed. This makes it invaluable in debugging hard-to-reproduce bugs, concurrency issues, and performance problems.

To test our hypothesis, we decided to log all findClass and close method calls on the URLClassLoader class. In order to do that with BTrace, we wrote a BTrace script – that is, a class with the @BTrace annotation. We could then add static methods that are invoked at different points in the lifecycle of the instrumented application. We used a couple of methods annotated with @OnMethod, specifying the class and method we’d like to learn more about:

Our onFindClass method will now be invoked every time the instrumented application calls URLClassLoader.findClass. We then told BTrace that we’d like to know the class loader instance on which the method was called, the class and method names, and the argument to the method (that is, the class name we want to load). We used BTrace annotations for that, too.

The full list of BTrace annotations can be found here.

We ended up with the following BTrace script:

We log the particular class loader instance on which each call is performed in order to get a better understanding of the method call sequence on each loader. Here’s the output from a Flink job run that exhibited the error, with our BTrace script attached (formatted for readability):

What we see here are the BTrace-instrumented logs from two method calls we care about: one from close() and one from findClass(). The log tells the story we suspected! Notice that findClass() is called with exactly the same class that appears in our stack trace: redis.actors.RedisClientActor$$anonfun$onConnectionClosed$1. The other important part is the @7ca2eee9 reference – this tells us that both of these method calls were executed on the same ClassLoader. And, as suspected, the close() method was executed before findClass(), causing the NoClassDefFoundError to be thrown.

Going from the proximate cause to the root cause

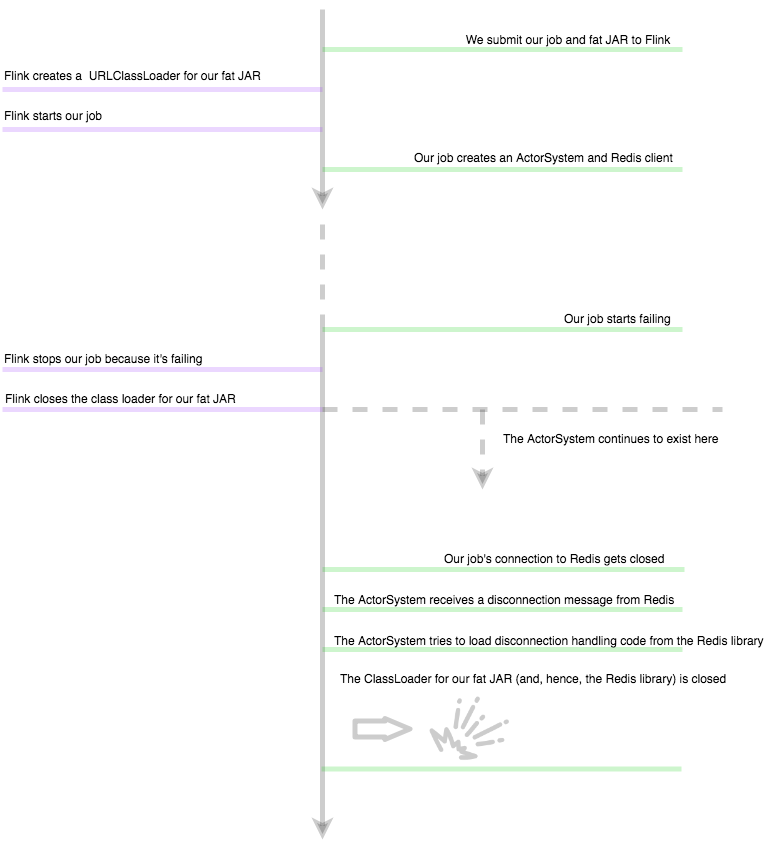

Okay, now we know what happens at the ClassLoader level, but let’s try to translate that to application- and framework-level terms. When Flink executes our application, it creates a custom FlinkUserCodeClassLoader for our fat JAR. This ClassLoader is used to load all classes from our application code and libraries. If our application starts erroring out at some point throughout its execution, Flink will cancel it and close this ClassLoader.

Our Redis client library uses an Akka [2] ActorSystem internally, and this is where messages from Redis get processed. Since an ActorSystem is relatively heavyweight, we keep a singleton ActorSystem and use it throughout our application. However, there is no logic to handle closing the ActorSystem when our application dies. This means that the ActorSystem is independent from our Flink application lifecycle.

As a result, nothing changes from the point of view of our ActorSystem when Flink stops our application. The ActorSystem just keeps processing messages – including, for example, Redis disconnection messages, since we don’t close our Akka-based Redis client. In order to process a disconnection message, the ActorSystem needs to load the relevant code from the Redis library. However, this code is only present in our fat JAR, and the ClassLoader for that has already been closed!

Timeline of the bug

The solution for this type of problem is to be more careful about playing nicely with the application lifecycle determined by the framework or environment. In the case of Flink, this meant using a RichSinkFunction for our Redis client. This allows us to execute the code for shutting down the Redis client and the Akka ActorSystem at the right moment.

While this was a tricky issue to figure out, we’re happy that we ended up with an implementation that is more in line with how the framework is supposed to be used. We also got to add a powerful tool to our arsenal: BTrace.

If you’re interested in using powerful tools to build powerful software, we are hiring! Or, if you have any questions, feel free to reach out to me on Twitter: @IvanVergiliev.

Footnotes

[1] This is not the case for Scala 2.12 – see http://scala-lang.org/news/2.12.0-M2/ for an announcement. However, we’re not using Scala 2.12 yet. If you interested in the way a lambda expression looks under the hood, check out https://blog.takipi.com/compiling-lambda-expressions-scala-vs-java-8/, for example.

[2] Akka is an implementation of the Actor model for Scala.https://akka.io

References

https://www.ibm.com/developerworks/library/j-dclp1/index.html